Creating document-based apps using SwiftUI

Document-based apps are very common on both the Mac and iOS. Rather than having the app manage its contents using a database or a similar solution (in which case it’d be called a “shoebox” app), the user is in control of which files are read from and written to.

This year, we saw the introduction of 100% SwiftUI-based apps, making it possible to express an entire app in a declarative way, without an app delegate or UIHostingController. It’s so simple that an entire app can fit in a tweet. Don’t believe me? The code below is a functional SwiftUI app:

import SwiftUI

@main
struct MiniAppApp: App {
    @AppStorage("text") var text = ""

    var body: some Scene {
        WindowGroup {
            TextEditor(text: $text).padding()
        }
    }
}

That’s a simple app that lets you edit some text and create new windows, but with the new SwiftUI app model, we can also create document-based apps in a similar way.

Declaring your document types

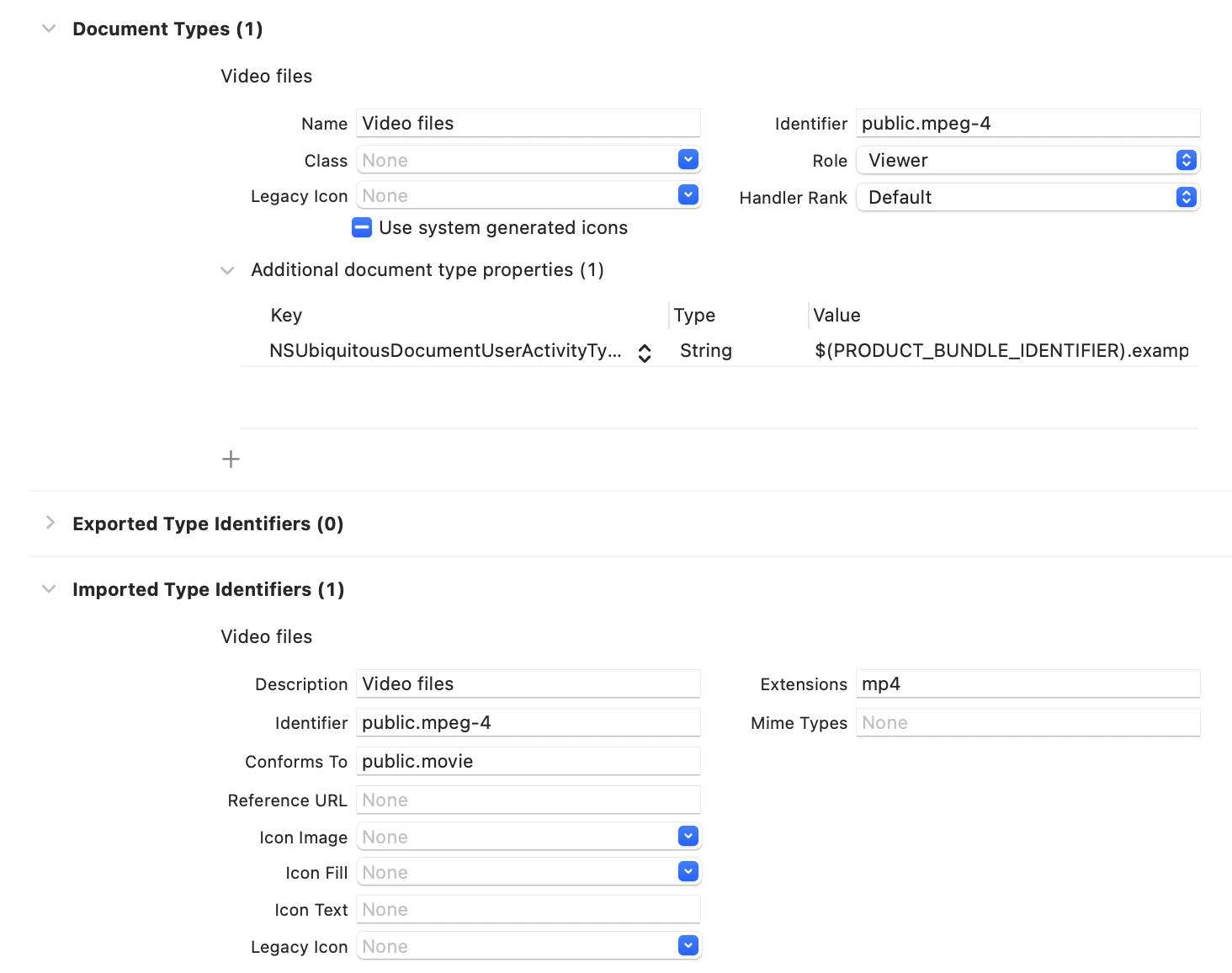

Every document-based app is going to operate with specific file types. To configure the types of files that an app can work with, they have to be declared in the app’s Info.plist. Xcode lets us edit that information in the Info tab of the app’s target:

In this article, I’ll create an app that can add a line of text on top of a video that can then be exported, so it will be able to open mp4 video files. In the Document Types section, I’ve declared a type called “Video files”, with the identifier public.mpeg-4. You can find the uniform type identifier for a given file type in the documentation or code completions for UTType.

Besides that, I’ve also declared the file type in the Imported Type Identifiers section, because this isn’t a type that I have created myself — it’s a public, standard file type.

Implementing a document model

Now that the document type has been declared, it’s time to create the document model that’s going to represent that file at runtime. The FileDocument protocol lets us do just that for value types. If you need to represent your documents as a reference type, then you can use the ReferenceFileDocument protocol instead.

import SwiftUI
import UniformTypeIdentifiers

extension UTType {
    static var videoFiles: UTType {
        UTType(importedAs: "public.mpeg-4")
    }
}

struct VideoMemeDocument: FileDocument {
    var title = "Enter Title Here"

    static var readableContentTypes: [UTType] { [.videoFiles] }

    init(configuration: ReadConfiguration) throws {
        // Read the file's contents from configuration.file.regularFileContents
    }

    func fileWrapper(configuration: WriteConfiguration) throws -> FileWrapper {
        // Create and return a FileWrapper with the updated contents.
        // The empty wrapper below is only a placeholder so that this compiles.
        FileWrapper(regularFileWithContents: Data())
    }
}

For some file types, it would be perfectly reasonable to make your document type conform to Codable and use JSONEncoder and JSONDecoder to read and write data in init(configuration:) and fileWrapper(configuration:). I didn’t do it for my sample app because it wasn’t a good fit for the type of content that I’m dealing with (video files).
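As a rough sketch of that Codable approach, here is a hypothetical NoteDocument type that stores its contents as JSON. It isn’t part of the sample app, and the init(data:) and encodedData() helpers are names made up for this illustration. The sketch uses the requirement names from the released SDK, init(configuration:) and fileWrapper(configuration:):

```swift
import SwiftUI
import UniformTypeIdentifiers

// Hypothetical document type, used only to illustrate the Codable approach.
struct NoteDocument: FileDocument, Codable {
    var title = "Untitled"
    var text = ""

    static var readableContentTypes: [UTType] { [.json] }

    init() {}

    // Hypothetical helper: decodes a document from raw JSON data.
    init(data: Data) throws {
        self = try JSONDecoder().decode(NoteDocument.self, from: data)
    }

    // Hypothetical helper: encodes the document's current state as JSON data.
    func encodedData() throws -> Data {
        try JSONEncoder().encode(self)
    }

    // FileDocument requirement: called when a file is opened.
    init(configuration: ReadConfiguration) throws {
        guard let data = configuration.file.regularFileContents else {
            throw CocoaError(.fileReadCorruptFile)
        }
        try self.init(data: data)
    }

    // FileDocument requirement: called when the document is saved.
    func fileWrapper(configuration: WriteConfiguration) throws -> FileWrapper {
        FileWrapper(regularFileWithContents: try encodedData())
    }
}
```

Keeping the encoding and decoding in plain Data-based helpers is a design choice for this sketch: it lets the JSON round trip be exercised without going through the document system.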

Your document type declares the types of files that it can read by implementing the static property readableContentTypes, which returns an array of UTType values. You might want to implement the writableContentTypes property as well (by default, it matches readableContentTypes), if the types of files that your document can write are different.

Notice how I declared the public.mpeg-4 type by initializing UTType with the importedAs: option. That’s because this is a file type that my app doesn’t own; it’s just supporting a public file type. If your app declares its own, custom file type, then you need to declare it within the Exported Type Identifiers setting in your target’s Info.plist, and then use UTType(exportedAs:) to instantiate it.
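To make the exported case concrete, here is a small sketch. The memeProject name and the com.example.videomeme.project identifier are hypothetical and would need a matching entry under Exported Type Identifiers. The sketch also shows that UniformTypeIdentifiers ships a constant, UTType.mpeg4Movie, for the same identifier that the article’s videoFiles property imports by hand:

```swift
import UniformTypeIdentifiers

extension UTType {
    // Hypothetical custom format owned by the app. The identifier is an
    // assumption and must also be declared in the target's Info.plist.
    static var memeProject: UTType {
        UTType(exportedAs: "com.example.videomeme.project")
    }
}

// System-declared constant for MPEG-4 movies.
let mpeg4 = UTType.mpeg4Movie
print(mpeg4.identifier) // public.mpeg-4
```

Whether to use the system constant or an imported declaration is a judgment call; the constant simply avoids repeating the identifier string in code.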

Putting the app together

Now that the model has been taken care of, it’s time to put the actual app and UI together. The app declaration looks like this:

@main
struct VideoMemeApp: App {
    var body: some Scene {
        DocumentScene()
    }
}

DocumentScene is the type that’s going to provide my app’s document UI and connect that UI with its underlying document model:

struct DocumentScene: Scene {
    var body: some Scene {
        DocumentGroup(viewing: VideoMemeDocument.self) { file in
            ContentView(document: file.$document)
        }
    }
}

Above I’m creating a DocumentGroup — which is a type of scene — that can be used to view documents of the type VideoMemeDocument. In my sample project, it doesn’t really make sense for an empty document to be created, since you can’t do much without a video file to begin with. But in many document-based apps, it makes sense for a new empty document to be created, in which case you’d use init(newDocument:editor:) to create your DocumentGroup, passing an instance of VideoMemeDocument instead of the type itself.
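For comparison, here is a minimal sketch of the editing variant. The PlainTextDocument and PlainTextScene types are hypothetical and exist only for this illustration. The sketch assumes the released SDK’s init(configuration:) and fileWrapper(configuration:) requirements:

```swift
import SwiftUI
import UniformTypeIdentifiers

// Hypothetical plain-text document, not part of the sample app.
struct PlainTextDocument: FileDocument {
    var text: String

    static var readableContentTypes: [UTType] { [.plainText] }

    init(text: String = "") {
        self.text = text
    }

    init(configuration: ReadConfiguration) throws {
        guard let data = configuration.file.regularFileContents,
              let text = String(data: data, encoding: .utf8) else {
            throw CocoaError(.fileReadCorruptFile)
        }
        self.text = text
    }

    func fileWrapper(configuration: WriteConfiguration) throws -> FileWrapper {
        FileWrapper(regularFileWithContents: Data(text.utf8))
    }
}

struct PlainTextScene: Scene {
    var body: some Scene {
        // Passing an instance (rather than the type) lets the system
        // create new, empty documents as well as open existing ones.
        DocumentGroup(newDocument: PlainTextDocument()) { file in
            TextEditor(text: file.$document.text)
        }
    }
}
```

Because file.$document is a binding, edits made in the TextEditor flow back into the document value that the system saves.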

Finally, my ContentView implementation displays the video file that was opened using the new VideoPlayer view from AVKit, and puts a TextField on top of the video so that the user can enter the text that they would like to overlay on top of the rendered video:

import SwiftUI
import AVKit

struct ContentView: View {
    @Binding var document: VideoMemeDocument

    var body: some View {
        ZStack {
            // `viewModel` is not shown in this article; see the full sample project.
            VideoPlayer(player: document.viewModel.player)

            VStack {
                TextField("Text", text: $document.title)
                    .foregroundColor(.white)
                    .shadow(radius: 2)
                    .font(.system(.largeTitle, design: .rounded))
                    .textFieldStyle(PlainTextFieldStyle())
                    .multilineTextAlignment(.center)
                    .padding(.top, 60)
                    .padding()

                Spacer()
            }
        }
    }
}

Adding a menu command

Now my document model has been defined, my app can open and display videos, and the user can enter text, but there’s no command to export the video containing the text yet. Fortunately, adding menu commands to a scene can be done quite easily by using the commands modifier:

struct DocumentScene: Scene {
    var body: some Scene {
        DocumentGroup(viewing: VideoMemeDocument.self) { file in
            ContentView(document: file.$document)
        }.commands {
            CommandMenu("Video") {
                Button("Export…") {
                    // Export the video here
                }
                .keyboardShortcut("e", modifiers: .command)
            }
        }
    }
}

The commands modifier adds commands to the app’s main menu on macOS. In my app, I’ve added a “Video” menu with an “Export…” item, which is a Button with a keyboard shortcut so that users can export videos by pressing ⌘E.

Here is where I hit a bit of a bump while developing my app. I’m used to creating document-based apps on the Mac with AppKit, in which case it’s common to use the responder chain to propagate the menu commands to the currently active document window.

There is no responder chain — or at least it’s not accessible — in SwiftUI apps, so the solution I found was to use a PassthroughSubject to send the menu command to my ContentView from my DocumentScene:

import SwiftUI
import Combine

struct DocumentScene: Scene {
    private let exportCommand = PassthroughSubject<Void, Never>()

    var body: some Scene {
        DocumentGroup(viewing: VideoMemeDocument.self) { file in
            ContentView(document: file.$document)
                .onReceive(exportCommand) { _ in
                    file.document.beginExport()
                }
        }.commands {
            CommandMenu("Video") {
                Button("Export…") {
                    exportCommand.send()
                }
                .keyboardShortcut("e", modifiers: .command)
            }
        }
    }
}

The exportCommand is a PassthroughSubject that doesn’t send any content and can’t fail, which is why I’ve declared it with the type <Void, Never>. Within my button’s action, I just call exportCommand.send() to cause it to emit an event, which I’m then catching by using the onReceive view modifier. In the onReceive callback, I'm calling the beginExport method in my document model, which is the method that I have implemented to export the video with the current text.
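To see the subject’s behavior in isolation, here is a small Combine-only sketch. The two counters are stand-ins for two open document windows and are assumptions of this example, not code from the sample app:

```swift
import Combine

// Same shape as the subject in DocumentScene: no payload, cannot fail.
let exportCommand = PassthroughSubject<Void, Never>()

// Hypothetical stand-ins for two document windows listening for the command.
var firstWindowExports = 0
var secondWindowExports = 0

let first = exportCommand.sink { _ in firstWindowExports += 1 }
let second = exportCommand.sink { _ in secondWindowExports += 1 }

// Every current subscriber receives the event.
exportCommand.send()

// After cancelling, the second subscriber no longer receives events.
second.cancel()
exportCommand.send()

print(firstWindowExports, secondWindowExports) // 2 1
```

A PassthroughSubject delivers each event to all of its current subscribers. If that carries over to the scene above, every open document window would receive the export command, so it may be worth checking which window is frontmost before exporting. I haven’t verified how the sample app behaves in that situation.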

As I mentioned, this was the solution I found to send commands from menus to my document’s UI context. If you think there’s a better solution than the one that I have presented, then feel free to tell me about it.

Here’s a quick demo of the app that I made.

If you'd like to see the resulting meme, I have posted it on Twitter for your appreciation.

I didn’t go into detail about the video rendering aspects of my sample app, which are outside the scope of this article, but you can check out the full source code on GitHub.

Conclusion

Being able to create entire apps in SwiftUI opens up a world of possibilities, and makes it easier than ever to get started with developing for Apple’s platforms.

This was my last article for this year’s edition of WWDC by Sundell & Friends. I hope that you liked reading my content over the course of this week, and that it has inspired you to explore what’s new on Apple’s platforms and their SDKs.

See you next year 👋🏻
